SUPVIS Justin

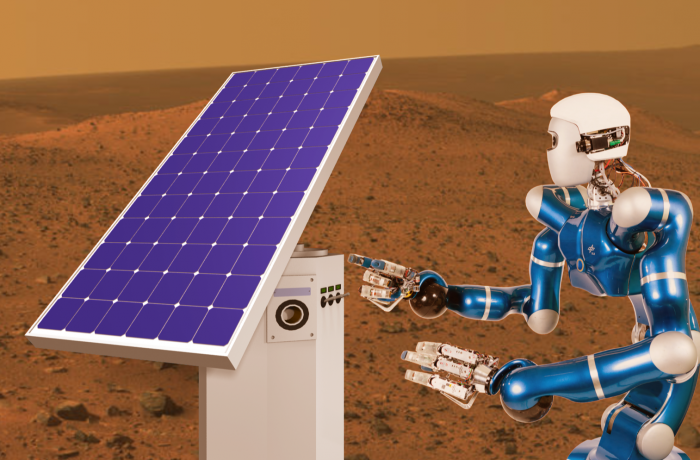

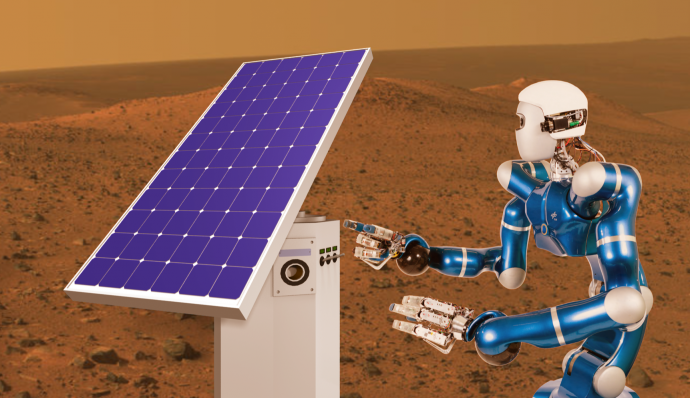

As the human race continues its exploration farther into the solar system, extraterrestrial habitats could be placed on asteroids, moons, and planets such as Mars. These habitats would require continuous monitoring and maintenance to remain safe and functional. However, astronauts may not be permanently available on site for this task. One alternative to direct inspection is remote inspection with a teleoperated robot. To cope with long communication round-trip times and possible data packet loss, we propose a knowledge-driven, supervised-autonomy approach to controlling the robot. In this approach, the knowledge required to interact with the environment is shared between the robot and the operator. This concept makes it possible to design an intuitive human-robot interface through which even complex manipulation tasks can be solved. The interface will be used as part of the SUPVIS Justin experiment within the ESA METERON project.

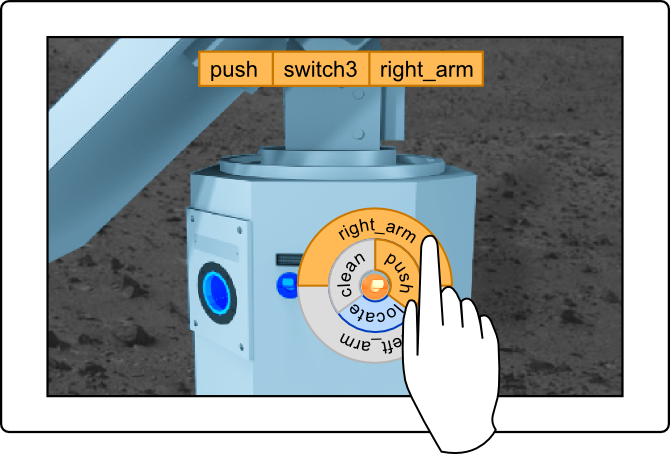

The SUPVIS Justin experiment addresses a planetary exploration scenario. It aims to demonstrate the feasibility of commanding a robot to carry out complex, dexterous tasks despite a significant communication round-trip time. SUPVIS Justin will address the local intelligence the robot requires to interpret and execute an astronaut’s commands. The user interface (UI) concept proposed in this work forms the basis for giving the astronaut enough flexibility to tailor task sequences while keeping the interface simple and intuitive.

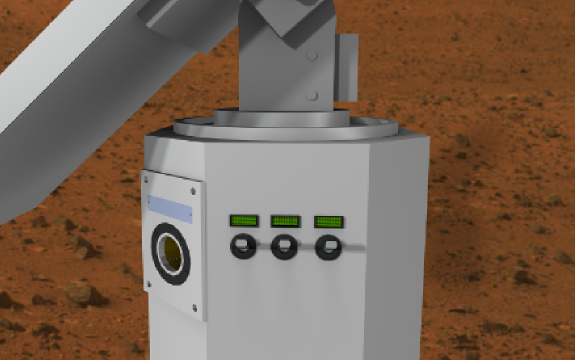

SUPVIS Justin will take place in a mock-up environment. In particular, the humanoid robot Rollin’ Justin, stationed on Earth at the German Aerospace Center (DLR) in Oberpfaffenhofen, Germany, will be commanded from the International Space Station (ISS). A fully functional Mars solar farm environment, consisting of an array of solar panel units, will be built at DLR for the experiment. The robot will not solve the entire task autonomously; instead, it will operate semi-autonomously under the guidance of an astronaut, who issues high-level commands through a novel human-robot interface. To carry out these commands, the robot reasons about symbolic combinations of possible actions that solve the task, followed by concrete task execution on the geometric level. In this way, the robot on the planetary surface acts as a coworker to the astronaut in orbit.
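The two-level idea described above (symbolic reasoning over possible actions, then execution on the geometric level) can be illustrated with a minimal Python sketch. It is not the SUPVIS Justin implementation: the action names, the facts such as `robot_at_panel`, the STRIPS-style breadth-first planner, and the `execute_geometric` stand-in are all hypothetical assumptions chosen only to show the split between the two levels.

```python
from collections import deque
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class Action:
    """Hypothetical symbolic action with STRIPS-style preconditions and effects."""
    name: str
    pre: frozenset
    add: frozenset
    delete: frozenset = frozenset()

    def applicable(self, state: frozenset) -> bool:
        # The action can be chosen only if all preconditions hold in the state.
        return self.pre <= state

    def apply(self, state: frozenset) -> frozenset:
        # Remove deleted facts, then add new ones.
        return (state - self.delete) | self.add


def plan(start: frozenset, goal: frozenset, actions: List[Action]) -> Optional[List[Action]]:
    """Symbolic level: breadth-first search for the shortest action sequence reaching the goal."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for action in actions:
            if action.applicable(state):
                nxt = action.apply(state)
                if nxt not in visited:
                    visited.add(nxt)
                    frontier.append((nxt, steps + [action]))
    return None  # no symbolic solution exists


def execute_geometric(steps: List[Action], feasible: Set[str]) -> List[str]:
    """Geometric level (stand-in): a real robot would plan and execute motions here.
    Each step succeeds only if its name is in the hypothetical `feasible` set."""
    log = []
    for action in steps:
        if action.name not in feasible:
            log.append(f"FAILED {action.name}")
            break  # stop so the operator or planner can react
        log.append(f"done {action.name}")
    return log


# Hypothetical solar-panel maintenance domain.
ACTIONS = [
    Action("move_to_panel", frozenset({"robot_at_base"}),
           frozenset({"robot_at_panel"}), frozenset({"robot_at_base"})),
    Action("grasp_brush", frozenset({"robot_at_base", "brush_at_base"}),
           frozenset({"holding_brush"}), frozenset({"brush_at_base"})),
    Action("clean_panel", frozenset({"robot_at_panel", "holding_brush"}),
           frozenset({"panel_clean"})),
]
START = frozenset({"robot_at_base", "brush_at_base"})
GOAL = frozenset({"panel_clean"})

if __name__ == "__main__":
    steps = plan(START, GOAL, ACTIONS)
    print([a.name for a in steps])
    print(execute_geometric(steps, {a.name for a in ACTIONS}))
```

Breadth-first search is used here only because it is short and returns the shortest symbolic sequence (here: grasp the brush, move to the panel, clean it). In a real system, each symbolic step would be checked by motion planning on the geometric level, and a failed step could trigger symbolic replanning or a request for operator input.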