Human-Robot Interface

In the near future, service robots will be introduced to a variety of scenarios, such as disaster response and space applications. Equipped with multiple manipulators and sophisticated sensor systems, such robots will far exceed the capabilities of today’s service robots, such as vacuum-cleaning robots. Because these new capabilities introduce higher complexity, commanding the robots becomes more difficult, which raises the need for autonomously acting robots. However, solving all kinds of service tasks fully autonomously, without human intervention, in unstructured and changing environments remains a challenging problem. One way to circumvent this issue is supervised autonomy, in which an operator remotely controls the robot at a high level of abstraction.

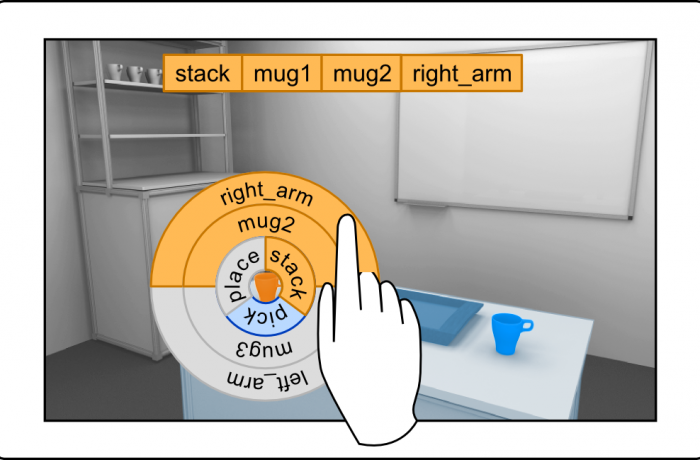

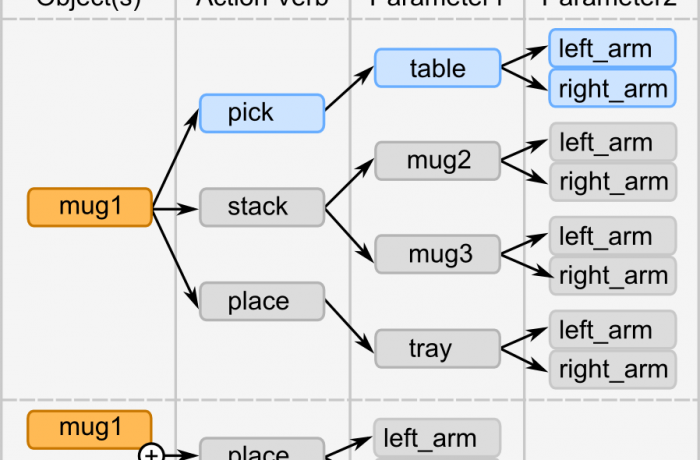

To command a service robot to perform complex manipulation tasks, it is crucial to provide an intuitive, high-level Human-Robot Interface (HRI). The main challenge lies in guiding the operator’s attention intuitively by visualizing the required information while hiding irrelevant information that would distract the operator. Since the number of possible manipulation actions increases drastically with the number of available objects, it is also important to narrow down the operator’s possible decisions with respect to the current state of the environment and the task. An appropriate knowledge representation, together with extensive reasoning mechanisms, is therefore mandatory.

We choose an object-centered, high level of abstraction for commanding the robot. Symbolic and geometric reasoning is thereby shifted from the operator to the robot, allowing the operator to focus on the task rather than on robot-specific capabilities. Because the feasibility of actions is evaluated in advance, the operator frontend can be simplified to offer only those actions that are currently possible. Displaying video directly from the robot gives the operator an egocentric perspective, which we augment with information about the robot’s internal state. Using a point-and-click paradigm, the operator can interact directly with the displayed information. Commanding a manipulation task is thus reduced to intuitively selecting the objects to be manipulated, which mirrors the operator’s real-world experience.
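The idea of evaluating actions in advance and offering only feasible ones can be illustrated with a small sketch. This is not the interface's actual reasoning system, which the text does not specify. It assumes a simple precondition-based scheme in which every action template is grounded over all known objects and then filtered against the current symbolic state. All names (`WorldObject`, `ActionTemplate`, `feasible_actions`, `actions_for_click`) and the example scene are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import permutations
from typing import Callable, Iterator, List, Set, Tuple

@dataclass
class WorldObject:
    name: str
    # Hypothetical symbolic facts about the object, e.g. {"graspable", "held"}
    facts: Set[str] = field(default_factory=set)

@dataclass
class ActionTemplate:
    name: str
    arity: int                          # number of object parameters
    precondition: Callable[..., bool]   # evaluated on the grounded objects

def ground_actions(templates: List[ActionTemplate],
                   objects: List[WorldObject]) -> Iterator[Tuple[ActionTemplate, Tuple[WorldObject, ...]]]:
    """Enumerate every grounding of every template over the known objects."""
    for t in templates:
        for args in permutations(objects, t.arity):
            yield t, args

def feasible_actions(templates: List[ActionTemplate],
                     objects: List[WorldObject]) -> List[Tuple[str, Tuple[str, ...]]]:
    """Keep only groundings whose precondition holds in the current state."""
    return [(t.name, tuple(o.name for o in args))
            for t, args in ground_actions(templates, objects)
            if t.precondition(*args)]

def actions_for_click(clicked: str,
                      feasible: List[Tuple[str, Tuple[str, ...]]]) -> List[Tuple[str, Tuple[str, ...]]]:
    """Menu offered after a click: feasible actions whose first argument is the clicked object."""
    return [(name, args) for name, args in feasible if args[0] == clicked]

# Hypothetical example scene: a cup, a bottle already held in the gripper, and a table.
objects = [
    WorldObject("cup", {"graspable"}),
    WorldObject("bottle", {"graspable", "held"}),
    WorldObject("table", {"surface"}),
]
templates = [
    ActionTemplate("pick", 1, lambda o: "graspable" in o.facts and "held" not in o.facts),
    ActionTemplate("place", 2, lambda o, s: "held" in o.facts and "surface" in s.facts),
]

feasible = feasible_actions(templates, objects)
print(len(list(ground_actions(templates, objects))), "grounded,", len(feasible), "feasible")
print(actions_for_click("bottle", feasible))
```

Even in this three-object scene, 9 groundings collapse to 2 feasible actions, and clicking the bottle yields a single option, `place(bottle, table)`. The number of groundings for a template of arity k over n objects is n!/(n-k)!, which is why this filtering is done by the robot rather than left to the operator.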